Like many emerging technologies, the marketing narrative and hype for AI technology deployment on Kubernetes is ahead of reality. Survey data from industry reports show that 90 percent of enterprises expect their AI workloads on Kubernetes to grow over the next 12 months, and 54% of enterprises say they already run some AI ML workloads on Kubernetes clusters. However, a very low percentage of companies have currently deployed AI-powered systems like knowledge platforms by using Large Language Models (LLMs) and Retrieval-Augmented Generation Systems (RAGs) in their production environments.

The potential for AI in Kubernetes is large but not mainstream yet. Many teams are still experimenting, consuming AI via APIs (e.g. Open AI), or running only partial RAG pipelines for testing purposes. This article will provide an introductory framework for protecting and securing Kubernetes AI workloads that are derived from LLMs and RAG systems deployed in Kubernetes environments.

The following best practices should be employed as a strategic guide for teams to be prepared for the future state of their environment and able to pivot quickly when LLMs and RAGs are more widely adopted.

AI Elements in Context: LLMs, RAGs, and Vector Databases

The emergence of LLMs and RAG systems will transform the landscape of enterprise AI. These technologies enable advanced tasks that range from customer support automation to business analytics. Kubernetes will become the platform of choice for deploying and scaling these AI workloads due to its flexibility and orchestration capabilities. However, this convergence introduces new data protection and security challenges that require specialized, layered defenses to protect sensitive application data and ensure regulatory compliance.

LLMs are AI models that are trained on extensive datasets that can understand and generate human language. Their data sensitivities include exposure to confidential prompts, training data, and generated outputs that may contain proprietary or regulated information. RAG architecture enhances LLMs by integrating external knowledge bases or document stores, which allows for the dynamic retrieval of relevant information. They fetch up-to-date information from an external source like a vector database or search engine and provide it to the LLM as context for generating more accurate and grounded responses. This increases both the value and attack surface of the AI system.

AI data assets that need protection include model weights and architecture files, embeddings and intermediate representations, user prompts and queries, retrieved documents and knowledge base content, as well as model outputs and responses. These are often stored in vector databases that are designed to store, index, and search high-dimensional vectors that are used to represent unstructured data like text, images, audio, or video. There are several reasons that it is critical to protect these data types, including:

- Cost

- AI data takes resources (e.g., GPU time, engineer cost, and system resources) to produce and losing that data and paying to re-produce it is cost prohibitive.

- Regulatory requirements

- Compliance with standards such as GDPR, HIPAA, and CCPA legally mandate data privacy, transparency, and accountability for all components of the AI pipeline.

- Corporate data lineage and audit trail standards

- Complete data provenance and sourcing documentation is increasingly becoming an integral part of the corporate audit process.

- Mission criticality to the enterprise

- As more AI workloads become mission-critical to your business, the loss of this data becomes a risk the enterprise cannot afford. This type of resilience also becomes a must-have as extended outages or performance issues can have significant business impact.

Kubernetes and AI: A Perfect Match?

Kubernetes offers essential orchestration capabilities for deploying and managing containerized AI components. These include inference servers for LLMs, document parsers, vector databases, API gateways, load balancers, and observability and security agents. In the context of Kubernetes, LLMs and RAG systems are increasingly being integrated into systems and workflows to enhance automation, observability, and developer/operator productivity. When used together, Kubernetes and AI can be a strong asset in the enterprise AI/ML Ops toolbox.

The Threat Landscapes for AI Data in Kubernetes

To ensure success, it is critical that you understand the threats that are endemic to both Kubernetes and emerging AI models, as well as be familiar with what security enhancements can combat these threats.

First, the shared responsibility nature of Kubernetes introduces complexity. For example, secrets may be stored in etcd, pods may run with excessive privileges, and network boundaries may be undefined. Secondly, Kubernetes lacks built-in data protection. This makes purposeful and planned backup and recovery of AI elements critical.

Specific Kubernetes-native threats include:

- Misconfigured secrets and volumes: Poorly managed secrets or persistent volumes can lead to leakage of credentials and sensitive data.

- Insecure service mesh and ingress configurations: Open or misconfigured network paths can expose internal APIs and services.

- Privilege escalation and container breakout: Vulnerable containers may be exploited to gain access to the underlying host or other workloads.

- Supply chain risks: Use of untrusted or compromised images, libraries, or pre-trained models.

LLMs and RAGs deployed in Kubernetes are vulnerable due to the complexity and dynamic nature of both the models and the orchestration environment. Additionally, the integration of external data sources in RAG systems increases the attack surface and makes them susceptible to security threats. Without rigorous security policies, monitoring, and regular updates, these systems can become high-value targets due to the sensitive data they process and generate.

Attack vectors that are specific to LLMs and RAGs include:

- Data poisoning: Malicious actors inject incorrect, misleading, or harmful data into the training or fine-tuning of data sets used by LLMs or into document stores that are used by RAG systems. For example, Kubernetes misconfigurations (e.g., open access to persistent volumes or storage buckets) may allow attackers to tamper with data used during retrieval. This is the AI equivalent of software supply chain cyberattacks, which target processes and tools that are used to develop and build software and compromise them before it reaches the end user.

- Prompt injection: This occurs when user inputs are crafted to manipulate the model’s behavior in unintended ways. This is especially common in RAGs, where retrieved text can include malicious instructions. A compromised microservice (e.g., front-end pod) might relay malicious user prompts without sanitization.

- Model exfiltration: This includes stealing or leaking the model (or its parameters) through access to internal components or outputs, which may be proprietary or confidential. Attackers might, for example, gain access via exposed pods, containers, or APIs (especially if role-based access control (RBAC) is weak).

- Unauthorized access to retrieved documents: Attackers access documents from the vector store or document database that are used for grounding responses in RAG systems. Inadequate network policies or secrets management may allow pods or users to access document storage.

Addressing the Threats

Multiple technology enhancements and best practices, if proactively applied by teams, can help reduce these Kubernetes and AI model-specific threat vectors. Security enhancements and proactive threat defense features for native Kubernetes environments include:

| Defense Type | Description |

| Pod Security and Admission Controllers | Enforce pod security standards to restrict privilege escalation and unsafe host access.

Use admission controllers to validate resource configurations before deployment. |

| Network Policies | Define strict network policies to isolate LLM inference services from retrieval components and external endpoints. |

| Service Mesh | Enable zero-trust networking, mandatory encryption, and fine-grained access control between microservices. |

| Workload Identity and Service Account Security | Assign unique service accounts with scoped permissions and regularly rotate credentials. |

| Image Scanning and Supply Chain Validation | Continuously scan container images for vulnerabilities.

Use signed images and maintain a software bill of materials (SBOM) for all dependencies. |

| Auditing, Monitoring, and Incident Response | Real-time telemetry and logging: Capture detailed logs for model interactions, data flows, and system events. Behavioral analytics: Comprehensive auditing: Incident response playbooks: |

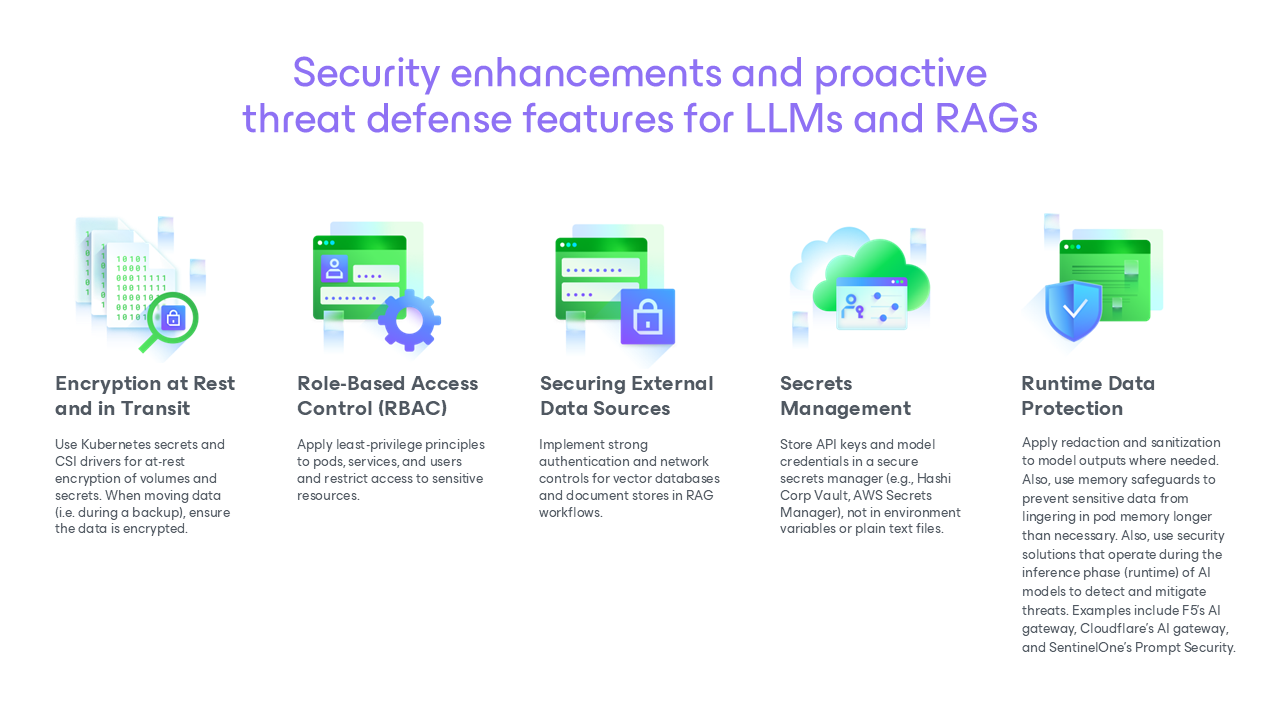

Security and proactive threat defense for LLMs and RAGs

AI Application Security: Strong Data Protection is a Must

Kubernetes application data protection is key for AI data and requires a well thought out strategy. Using a solution like Veeam Kasten helps ensure your AI data is secure. Also, with Veeam Kasten, cloud native tools, (e.g. F5’s AI gateway, listed above), can automatically trigger a Veeam Kasten backup or restore action when a threat is detected to help ensure a strong security profile.

Act Now to Ensure Success

Securing LLM and RAG workloads in Kubernetes requires a holistic, proactive approach. This includes adopting a layered defense strategy with Kubernetes-native controls, which designs secure AI pipelines end-to-end from data ingestion to model serving. It also helps maintain strong governance and fosters DevSecOps and MLOps collaboration to ensure security is a shared responsibility. By following this framework, organizations can confidently deploy AI systems that are resilient, compliant, and trustworthy.

Secure your Kubernetes AI data

With Veeam Kasten, you can back up, recover, and secure Kubernetes workloads with confidence and compliance.

Resources

For more information on how to build a robust cloud native AI protection strategy, see these two recorded webinars and demos.

- AI Ready Resilience: Protect Your Cloud-Native Apps and Data

- Ensuring Cloud Native Resilience in the AI and quantum Era

The post Securing AI in Kubernetes: A Guide to Getting Started with LLMs and RAGs appeared first on Veeam Software Official Blog.

from Veeam Software Official Blog https://ift.tt/IpsZ4PE

Share this content: